In my previous post I elaborated on why I think predictive capability is crucial for an intelligent agent, and how we get fooled when a purely reactive system gets 90% of motor commands right. This relates to two ways of thinking about the problem: in terms of statistics or in terms of dynamics. The current mainstream (the statistical majority) focuses on statistics, and statistically that works. However, much as with guiding behavior, the statistical majority may miss important outliers: important information is often hidden in the tail of the distribution.

I've mentioned the Predictive Vision Model (PVM), which is our (mine and a few like-minded colleagues') way to introduce the predictive paradigm into machine learning. It is described in a lengthy paper, but not everyone has the time to go through it, so I will briefly describe the principles here:

Idea

The idea is to create a predictive model of the sensory input (in this case visual). Since we don't know the equations of motion of the sensory values, the way to do it is via machine learning: simply associate the values of the inputs now with those same values in the future (think of something like an autoencoder, but one that predicts not the signal itself but the next frame of the signal). Once that association is created, we can use the resulting system to predict the next values of the input. In fact, we can train and use the system at the same time. The resulting system will obviously not represent the outside reality "as it really is", but it will generate an approximation. In many cases such an approximation may well be enough to generate very robust behavior.
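A minimal sketch of such a predictive encoder, used and trained at the same time, might look as follows. It is only an illustration under simplifying assumptions: the "video" is a hypothetical one-dimensional frame with a single bright pixel moving one step per frame, and the predictor is a plain linear map trained with the delta (LMS) rule, not the perceptron-based units PVM actually uses.

```python
import random


def moving_dot(n_pixels, n_steps):
    """Toy 'video': one bright pixel moving one position right per frame, wrapping around."""
    frames = []
    for t in range(n_steps):
        frame = [0.0] * n_pixels
        frame[t % n_pixels] = 1.0
        frames.append(frame)
    return frames


class OnlinePredictor:
    """Linear map from the current frame (plus a bias input) to the next frame."""

    def __init__(self, n, lr=0.1, seed=0):
        rng = random.Random(seed)
        self.lr = lr
        # w[i][j]: weight from input j (index n is the bias) to predicted pixel i
        self.w = [[rng.uniform(-0.1, 0.1) for _ in range(n + 1)] for _ in range(n)]

    def predict(self, frame):
        x = frame + [1.0]
        return [sum(wij * xj for wij, xj in zip(row, x)) for row in self.w]

    def learn(self, frame, guess, next_frame):
        """Delta rule: nudge the weights to reduce the squared error of `guess`."""
        x = frame + [1.0]
        err = [t - p for t, p in zip(next_frame, guess)]
        for i, e in enumerate(err):
            for j, xj in enumerate(x):
                self.w[i][j] += self.lr * e * xj
        return sum(e * e for e in err)


frames = moving_dot(n_pixels=4, n_steps=2000)
model = OnlinePredictor(n=4)
errors = []
for now, future in zip(frames, frames[1:]):
    guess = model.predict(now)                      # use the model...
    errors.append(model.learn(now, guess, future))  # ...then train it once the future arrives
```

There is no separate training phase: every prediction is made with the current weights and immediately serves as a training example when the next frame comes in, so the error in `errors` shrinks as the sequence plays.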

Additional details

Building a predictive encoder like the one above is in itself easy. The problem arises with scaling. This can sometimes be addressed by building a system out of small pieces and scaling their number, rather than by trying to build a giant entity at once. We apply this philosophy here: instead of creating a giant predictive encoder to associate big pictures, we create many small predictive encoders, each working with a small patch of input, as shown in the diagram below:

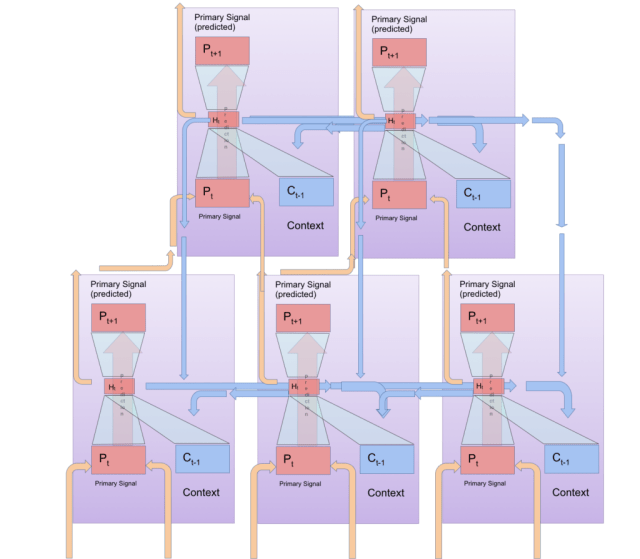
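Cutting the input into patches, one per unit, is straightforward. The sketch below assumes a frame stored as a list of rows whose dimensions are multiples of the patch size; the function name and the non-overlapping square tiling are illustrative assumptions, not necessarily how the PVM code does it.

```python
def split_into_patches(frame, patch):
    """Cut a 2D frame (list of rows) into non-overlapping patch x patch tiles.

    Returns a dict mapping the grid position (gy, gx) of each tile to its
    flattened pixel values; each tile would feed one small predictive unit.
    """
    h, w = len(frame), len(frame[0])
    if h % patch or w % patch:
        raise ValueError("frame dimensions must be multiples of the patch size")
    tiles = {}
    for gy in range(h // patch):
        for gx in range(w // patch):
            tiles[(gy, gx)] = [
                frame[gy * patch + dy][gx * patch + dx]
                for dy in range(patch)
                for dx in range(patch)
            ]
    return tiles


frame = [[4 * r + c for c in range(4)] for r in range(4)]  # 4x4 toy frame
tiles = split_into_patches(frame, patch=2)                  # 2x2 grid of 2x2 tiles
print(tiles[(0, 1)])  # [2, 3, 6, 7]
```

A larger input only adds tiles (and therefore units); the input size of each individual unit stays at patch × patch values.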

So we have a "distributed" predictive system, but it is not great yet. Each unit makes its predictions on its own, whereas the signal the units process has some global coherence. Is there any way to improve the situation? We can try to wire the units together, so that each unit informs its neighbours about what it just saw or predicted. This sounds great, but if we start sending multiple copies of the signal to each unit, the units will grow enormously and we will soon be unable to scale the system. Instead we introduce compression: much like in a denoising autoencoder, we force each unit not only to predict, but to predict using only the essential features. We achieve that by introducing a bottleneck (narrowing the middle layer). Once this is done, we can wire the "compressed" representation to neighbouring units as lateral connections:

Now each unit can make its prediction with a bit of awareness of other nearby units and their signals. Given that sensory data typically has some "locality", those nearby signals can bring additional information helpful in predicting what will happen. Note that even with the lateral wiring the system stays sparsely connected (local connectivity only), so it remains scalable in the sense that we can add more and more units (process images at higher resolution) and the total convergence time will remain unaffected (assuming we add compute power proportional to the number of added units).
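The lateral wiring can be sketched as follows, assuming units on a square grid, 4-connected neighbourhoods and zero-padding at the border (all illustrative choices, with hypothetical function names). The point is that a unit receives its neighbours' compressed states, so its input size depends only on the patch and bottleneck sizes, never on how many units the grid contains.

```python
def neighbours(gy, gx, rows, cols):
    """4-connected neighbours of (gy, gx); None marks a position outside the grid."""
    result = []
    for dy, dx in ((-1, 0), (1, 0), (0, -1), (0, 1)):
        y, x = gy + dy, gx + dx
        result.append((y, x) if 0 <= y < rows and 0 <= x < cols else None)
    return result


def lateral_context(hidden, gy, gx, rows, cols, hidden_size):
    """Concatenate the neighbours' bottleneck states, zero-padding at the border
    so every unit gets a context vector of the same length."""
    context = []
    for pos in neighbours(gy, gx, rows, cols):
        context.extend(hidden[pos] if pos is not None else [0.0] * hidden_size)
    return context


def unit_input_size(patch, hidden_size, n_neighbours=4):
    """Per-unit input: own patch plus compressed neighbour states (independent of grid size)."""
    return patch * patch + n_neighbours * hidden_size


rows, cols, hidden_size = 3, 3, 2
hidden = {(y, x): [float(y), float(x)] for y in range(rows) for x in range(cols)}
corner = lateral_context(hidden, 0, 0, rows, cols, hidden_size)
print(corner)  # [0.0, 0.0, 1.0, 0.0, 0.0, 0.0, 0.0, 1.0]
```

With hypothetical 8x8 patches and a 10-unit bottleneck, `unit_input_size(8, 10)` is 64 + 40 = 104 values, compared with 64 + 4 × 64 = 320 if each neighbour sent its raw pixels instead.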

Hierarchy and feedback

We now have a layer of units which predict their future inputs; what can we do with it? The problem is that prediction happens only at a very fine scale: even with lateral feedback, the system cannot discover any regularities occurring on a large scale. To capture large-scale regularities we would need a unit processing the entire scene, which we want to avoid because it is not scalable. But notice that we are already compressing, so if we add another layer (now predicting the compressed features of the first layer), each unit in the next layer will have access to a larger visual field (although deprived of the features which turned out not to be useful for prediction at the lower level):
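How the layers shrink and how the visual field of each unit grows can be worked out with a little arithmetic. The sketch assumes that each higher-level unit reads a 2x2 block of units below it and that the grid side is divisible by 2 at every level; the 16x16 grid of 6x6-pixel patches (96x96 pixels in total) is a hypothetical configuration chosen for illustration.

```python
def hierarchy(grid, patch, fan_in=2):
    """List (units per side, visual field per unit in pixels) for each layer,
    adding layers until a single unit covers the whole input."""
    layers = [(grid, patch)]
    field = patch
    while grid > 1:
        if grid % fan_in:
            raise ValueError("grid side must be divisible by fan_in at every level")
        grid //= fan_in
        field *= fan_in
        layers.append((grid, field))
    return layers


for level, (side, field) in enumerate(hierarchy(grid=16, patch=6)):
    print(f"layer {level}: {side}x{side} units, each seeing {field}x{field} pixels")
```

Each upper unit still receives only fan_in × fan_in compressed vectors, so its input size stays fixed; what grows with height is the area of the scene those vectors summarise.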

OK, so now the higher-level units may discover larger-scale regularities, and we can add more layers until we are left with one unit that captures the entire scene (although at a very coarse "resolution"). What can we do with those additional predictions? Well, for one thing we can send them back as feedback, as they can only help the lower-level units predict better:

We now arrive at a fully recurrent system (note that we dropped the word "lateral" from "context", as the context now also includes top-down feedback). Each unit has a clear objective function (prediction). The error is injected into the system in a distributed way (not as a single backpropagated label), and the system remains scalable. This, in principle, is the generic PVM: nothing fancy, just associative memories arranged in a new way. The objective is to predict, and the system will be able to do so if it creates an internal model of the input signal. It can create quite a sophisticated model thanks to all the recurrent connections. Animated flow of information:
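A single unit of this kind can be sketched as a small three-layer perceptron: its input is the unit's signal concatenated with its context (lateral and top-down), the narrow hidden layer is the compressed state that neighbours and parents would read, and the output is a prediction of the next signal. Training uses only the unit's own prediction error. The class name, layer sizes and learning rate are hypothetical, and the context here is a constant stand-in rather than real neighbour or feedback states.

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


class PredictiveUnit:
    """[signal, context] -> bottleneck -> predicted next signal, trained on local error only."""

    def __init__(self, n_signal, n_context, n_hidden, lr=0.5, seed=0):
        rng = random.Random(seed)
        n_in = n_signal + n_context + 1  # +1 for the bias input
        self.w1 = [[rng.uniform(-0.5, 0.5) for _ in range(n_in)] for _ in range(n_hidden)]
        self.w2 = [[rng.uniform(-0.5, 0.5) for _ in range(n_hidden + 1)]
                   for _ in range(n_signal)]
        self.lr = lr
        self.hidden = [0.0] * n_hidden  # compressed state that neighbours/parents would read

    def forward(self, signal, context):
        self.x = signal + context + [1.0]
        self.hidden = [sigmoid(sum(w * v for w, v in zip(row, self.x))) for row in self.w1]
        self.h = self.hidden + [1.0]
        self.pred = [sigmoid(sum(w * v for w, v in zip(row, self.h))) for row in self.w2]
        return self.pred

    def learn(self, next_signal):
        """Backpropagate (next_signal - prediction) through this unit only."""
        err = [t - p for t, p in zip(next_signal, self.pred)]
        d_out = [e * p * (1.0 - p) for e, p in zip(err, self.pred)]
        # hidden deltas use the output weights before they are updated
        d_hid = [sum(d_out[k] * self.w2[k][j] for k in range(len(d_out))) * hj * (1.0 - hj)
                 for j, hj in enumerate(self.hidden)]
        for k, dk in enumerate(d_out):
            for j, hj in enumerate(self.h):
                self.w2[k][j] += self.lr * dk * hj
        for j, dj in enumerate(d_hid):
            for i, xi in enumerate(self.x):
                self.w1[j][i] += self.lr * dj * xi
        return sum(e * e for e in err)


# Toy signal: a bright pixel cycling through 4 positions; context is a constant stand-in.
signals = [[1.0 if i == t % 4 else 0.0 for i in range(4)] for t in range(4001)]
unit = PredictiveUnit(n_signal=4, n_context=2, n_hidden=3)
errors = []
for now, future in zip(signals, signals[1:]):
    unit.forward(now, context=[0.0, 0.0])
    errors.append(unit.learn(future))
print(sum(errors[:4]) / 4, sum(errors[-4:]) / 4)  # the average error drops as the unit learns
```

Nothing in `learn` reaches outside the unit: in a full system each unit would run the same loop on its own patch, with `context` filled from its neighbours' and parent's `hidden` vectors.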

Consequences

OK, so now that we have it, can we elaborate on what it can do? Before we jump to that, let me state a few important observations:

- PVM uses associative memory units with compression. They can be implemented with backprop or in any other way: a Boltzmann machine, a spiking network, whatever you like. This gives the system enormous flexibility in terms of hardware implementation.

- The system is distributed and totally bypasses the vanishing gradient problem, because the training signal is local, always strong and plentiful. Hence there is no need for tricks such as convolution, fancy regularisation and so on.

- PVM is used here for vision, but any modality is fine. In fact, you could liberally wire modalities together so that they co-predict each other at different levels of abstraction.

- Feedback in PVM can be wired liberally and cannot mess things up. If signals are predictive, they will be used; if not, they will be ignored (which is the worst that can happen).

- "Signal" in PVM may refer to a single snapshot (say, a visual frame) or to a sequence. In fact, I did some promising experiments with sequences of several visual frames.

- If parts of the signal are corrupted in a predictable way (say, dead pixels on a camera), they will be predicted at the low level of processing, and the capacity devoted to representing them in the upper layers will be minimised. In the extreme case of, e.g., constantly inactive pixels, they can be predicted solely from the bias unit (a constant), and their existence is totally ignored by the upper layers (much like the blind spot in the human eye).

- Since the system operates online and is robust to errors (see the comment above), units can potentially run asynchronously. If they constantly work at different speeds, the properties of their signals are just part of the reality to be predicted by the downstream units. Temporal glitches may not improve things, but they will certainly not cause a disaster. Avoiding global synchronisation is important when scaling things up to massive numbers (see Amdahl's law).

- As a byproduct, the system generates the prediction error, which is essentially an anomaly detection signal. Since it operates at many scales, it can report anomalies at different levels of abstraction. This is a behaviourally useful signal and relates to the concepts of saliency and attention.
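To illustrate how the prediction error could serve as an anomaly signal, the sketch below keeps an exponentially weighted running mean and variance of one unit's error and flags values far above the running mean. The thresholding rule (k standard deviations after a warm-up period), the class name and all parameter values are assumptions made for this example, not part of PVM as described here. Running one such monitor per unit and per layer would give anomaly reports at several levels of abstraction.

```python
class ErrorMonitor:
    """Flags prediction errors far above their exponentially weighted running mean."""

    def __init__(self, alpha=0.05, k=4.0, warmup=50):
        self.alpha, self.k, self.warmup = alpha, k, warmup
        self.mean, self.var, self.n = 0.0, 0.0, 0

    def update(self, err):
        """Return True if err is anomalous, then fold it into the running statistics."""
        self.n += 1
        if self.n == 1:
            self.mean = err
            return False
        anomalous = self.n > self.warmup and err > self.mean + self.k * self.var ** 0.5
        delta = err - self.mean
        self.mean += self.alpha * delta
        self.var = (1.0 - self.alpha) * (self.var + self.alpha * delta * delta)
        return anomalous


# A well-predicted stream (small errors) followed by one surprising frame.
stream = [0.010 + 0.001 * (t % 3) for t in range(100)] + [1.0]
monitor = ErrorMonitor()
flags = [monitor.update(e) for e in stream]
print(flags.index(True))  # 100: only the last, surprising frame is flagged
```

Because the statistics adapt, a persistent change (for instance a camera that starts to flicker) stops being flagged once it becomes predictable, which matches the intuition that only surprising input deserves attention.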

Does it work and how to use it

PVM does work at its primary, predictive task. But can it do anything else? The prediction error, for one, is very important for an agent directing its cognitive resources, but that is a long-term goal; for now we decided to add a supervised task of visual object tracking and test PVM on that.

We added several bells and whistles to our PVM unit:

- we made the unit itself recurrent (its own previous state becomes part of the context). In that sense the PVM unit resembles a simple recurrent neural network. One could put an LSTM there as well, but I don't really like LSTMs, as they seem very "unnatural", and I actually think it should not be necessary in this case (I will elaborate on that in one of the next posts).

- we added several "pre-computed features" to our input vector. The features are there just to help the simple three-layer perceptron find relevant patterns.

- we added a readout layer in which, via explicitly supervised training (now with labeled data; the box marked "M"), we could train a heatmap of the object of interest. Much like everything else in PVM, that heatmap is produced in a distributed manner by all the units and later combined to compute the bounding box.
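The last step, turning per-unit heatmaps into a box, can be illustrated with a deliberately crude sketch: each unit contributes a small tile of the heatmap, the tiles are stitched back into a full map, and the box is taken around all pixels above a threshold. The tiling layout, the 0.5 threshold and the function names are assumptions for illustration; the PVM code may combine the heatmap quite differently (a real tracker would, for instance, need to cope with spurious activations).

```python
def assemble_heatmap(tiles, grid, patch):
    """Stitch per-unit heatmap tiles {(gy, gx): flat list} back into a full 2D map."""
    size = grid * patch
    heat = [[0.0] * size for _ in range(size)]
    for (gy, gx), tile in tiles.items():
        for dy in range(patch):
            for dx in range(patch):
                heat[gy * patch + dy][gx * patch + dx] = tile[dy * patch + dx]
    return heat


def bounding_box(heat, threshold=0.5):
    """Smallest box (x0, y0, x1, y1), inclusive, around pixels above threshold; None if empty."""
    coords = [(x, y) for y, row in enumerate(heat) for x, v in enumerate(row) if v > threshold]
    if not coords:
        return None
    xs = [x for x, _ in coords]
    ys = [y for _, y in coords]
    return min(xs), min(ys), max(xs), max(ys)


patch, grid = 2, 2
tiles = {(gy, gx): [0.0] * 4 for gy in range(grid) for gx in range(grid)}
tiles[(0, 1)] = [0.0, 0.9, 0.0, 0.8]  # object in the top-right unit...
tiles[(1, 1)] = [0.0, 0.7, 0.0, 0.0]  # ...extending into the unit below it
heat = assemble_heatmap(tiles, grid, patch)
print(bounding_box(heat))  # (3, 0, 3, 2) as (x0, y0, x1, y1)
```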

And the schema for heatmap generation:

Long story short: it works. The details are available in our lengthy paper, but in general we can train this system for pretty robust visual object tracking, and it beats several state-of-the-art trackers. Here is an instance of the system running:

Top row, from left: the input signal (visual), followed by the internal compressed activations of the subsequent layers. Second row: consecutive predictions; the first layer predicts the visual input, the second layer predicts the first layer's activations, and so on. Third row: the error (difference between the signal and the prediction). Fourth row: the supervised object heatmap (this particular system is sensitive to the stop sign). Rightmost column: various tracking visualisations.

And here are a few examples of object tracking from the test set (note: we never evaluate the system on the training set; the only thing that matters is generalisation). The red box is the human-labeled ground truth used for evaluation, and the yellow box is what the PVM tracker returns. Overall it is pretty impressive, particularly given that it works with low-resolution (96x96) video (still enough resolution, though, for humans to understand very well what is in the scene).
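Agreement between a yellow and a red box is commonly quantified by their intersection over union (IoU), and a tracker is often summarised by the fraction of frames in which the IoU exceeds some threshold. The sketch below is a generic version of that measure, assuming boxes given as (x, y, width, height) and made-up example boxes; the exact evaluation metrics used for PVM are described in the paper and may differ.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x, y, width, height)."""
    ax, ay, aw, ah = a
    bx, by, bw, bh = b
    ix = max(0.0, min(ax + aw, bx + bw) - max(ax, bx))  # overlap width
    iy = max(0.0, min(ay + ah, by + bh) - max(ay, by))  # overlap height
    inter = ix * iy
    union = aw * ah + bw * bh - inter
    return inter / union if union > 0 else 0.0


def success_rate(predicted, ground_truth, threshold=0.5):
    """Fraction of frames where the tracker's box overlaps the labeled box above threshold."""
    hits = sum(iou(p, g) > threshold for p, g in zip(predicted, ground_truth))
    return hits / len(ground_truth)


truth = [(10, 10, 20, 20), (12, 10, 20, 20), (14, 10, 20, 20)]    # "red" boxes
tracked = [(10, 10, 20, 20), (22, 10, 20, 20), (60, 60, 10, 10)]  # "yellow" boxes
print([round(iou(p, g), 3) for p, g in zip(tracked, truth)])  # [1.0, 0.333, 0.0]
print(success_rate(tracked, truth))  # 1 of 3 frames above 0.5
```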

Conclusions

So, unlike deep convolutional networks, which were conceived in the late 1980s based on the neuroscience of the 1960s, PVM is actually something completely new, based on more contemporary findings about the function and structure of the neocortex. It is not so much a new associative memory as a new way to use existing associative memories. PVM bypasses numerous problems plaguing other machine learning models, such as overfitting (thanks to the unsupervised paradigm there is a wealth of training data, so overfitting is very unlikely) or the vanishing gradient (thanks to the local and strong error signal). It does so not by using questionable tricks (such as convolution or dropout regularisation), but by restricting the task to online signal prediction. In that sense PVM is not a general black box that can be used for anything (it is not clear whether such a black box even exists). However, PVM can be used in many applications where current methods struggle, particularly in perception for autonomous devices, where anticipation and a rough model of reality will be crucial.

As with many new things, though, PVM has a great challenge ahead: it has to be shown to be better than conventional deep learning, which, especially given huge resources, works very well in many niches. In machine learning the methodology has slowly evolved to be extremely benchmark-focused, and the best way to succeed under such a methodology is to build incrementally upon what already works very well. Although this may guarantee academic success, it also guarantees getting stuck in a local minimum (which, in the case of deep learning, is pretty deep). PVM is different in that it is driven by intuition about what would be necessary to make robotics really work in the long run, as well as by evidence from neuroscience.

The code for the current implementation of PVM is available on GitHub. It is a joy to play with (though it needs a beefy CPU, as the current implementation does not use a GPU).
