In the previous post I applied an off the shelf deep net to get an idea how it performs on average street/office video. The purpose of this exercise was to critically examine and reveal what these award winning models are actually like. The results were a mixed bag. The network was able to capture the gist of the scene, but made serious mistakes every once in a while. Granted the model I used for that experiment was trained on ImageNet which has a few biases and is probably not the best set to test "visual capabilities in the real world". In the the current post I will discuss another problem which is plaguing deep learning models - adversarial stimuli.

Deep nets can be made to fail on purpose. It's been first shown in [1] and there have been quite a few papers since then with different methods to construct stimuli that fool deep models. In the simplest case one can directly derive these stimuli from the network itself. Since ConvNets are purely feedforward systems (most of them at least), we can trace back the gradients. Typically gradients are used to modify the weights such that they better fit the given example (training process). But one can do something different: keep the weights frozen and instead use the gradients to modify the input such that it appears to the network more like a given category. Nothing stands in the way of arbitrarily choosing a category and projecting gradients on random pictures.

Now the scary part is that it works. And the most scary is that it works very well. It is often enough to modify the picture just a tiny bit (not even perceivable to a human in most cases) in order to make the network confidently (very confidently!) report something which is clearly not there. Now there are several papers reporting this phenomenon and code available online (see below). In one paper the adversarial examples were even printed out and reacquired with a camera and often still managed to fool the network. Moreover these adversarial examples appear to generalise across models with varying architectures and trained on different datasets! Consequently it appears the problem is more serious than just a few holes in one particular network - it affects the entire family of models.

I've decided to play with this a little myself (admittedly it is quite addicting to fool these networks). I used Keras for my little experiment below, based on an excellent blog post by Francois Chollet and a great blog post here by Julia Evans (bug me if you want me to publish the code). Several examples below created from the VGG-16 model:

Exhibit 1. Original image classified with VGG-16.

Exhibit 2. Selection of adversarial examples generated for the image above.

Exhibit 3. Original image classified with VGG-16.

Exhibit 4. Selection of adversarial examples generated for the image above.

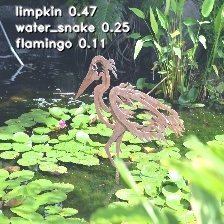

Exhibit 5. Original image classified with VGG-16.

Exhibit 6. Selection of adversarial examples generated for the image above.

And here are examples of adversarial cases derived from a grey image which give an idea of what these networks are sensitive to:

Exhibit 7. Adversarial examples generated from a grey image.

The existence of adversarial examples is described as a security threat for applications of deepnets. I would say that this is just the tip of the iceberg and reveals something much more important. Something that most people don't realize yet - the level of perception of these systems is rudimentary. The perception is based on isolated and disconnected textures and low level features without any regard for actual precise spatial composition (which should not be surprising since pooling loses spatial information) of these features, light or shadows, apparent 3d structure, dynamical context and so on. What we have today may be enough to win some competitions, roughly sort through pictures in a picture library or improve web search. But that is NOT enough for any application involving guiding a behaviour of an autonomous device like a robot or a self driving car. As I mentioned, a fully autonomous car requires substantial understanding of the surrounding reality and cannot possibly be fooled into thinking that a pattern of dust on the windshield is an indian elephant. Some of those aspects can be addressed by fusing multiple sensors such as radar/lidar and so on. That however does not solve the problem entirely either - even if the car knows where the obstacles are in space, it still needs to understand what the situation is and how to properly react given all the spatial, temporal and social context (even with 99% performance there is still plenty room for corner cases). This is a really difficult task to accomplish.

Now going back to the main topic, there are attempts to build systems where adversarial examples are used for training - where one model tried to break the other - one model generates examples that the other fails to classify. This may certainly improve things a bit, likely find the "weakest points" of the network, but the core vulnerability remains. In my personal opinion the fact alone that these networks are feedforward is both what allows us to train them in the first place and what causes the adversarial problems. In addition there are almost certainly a lot more adversarial stimuli than non adversarial ones in the (astronomically large) space of all images, so adversarial training will never be able to patch all the holes.

Human vision is also not perfect since generally reconstructing the content of a 3d scene based on 2d projection is under-constrained. But optical illusions that fool humans are completely different then the adversarial examples above. To me, this indicates that we might be on a wrong path with vision altogether. It would be a lot more reassuring if our models were fooled by the same illusions as we do.

It is easy perhaps to spot a problem, but not so easy to fix it. In order to not be accused of naysaying, I (with a few colleagues) did put forward a new approach to vision (and in fact perception in general) in a recent paper (see also this post). Our approach is fully recurrent, based on associative memory components which can be implemented in a number of ways (not necessarily backprop!) and scalable. The problem with deep nets is that there has been a substantial investment in the infrastructure and hype, so people are not willing to easily admit that we might be in a dead end. I think we squeezed out just about everything from the Neocognitron (on deep steroids), and it is time to move on. I hope I'll convince as many people to that idea as possible.

In the next post I will expand a bit on what I think is the problem in solving perception for an artificial system, namely the fact that we are blind to how our own perception works and can't really "see" where the problem is. It is a dangerous mixture of AI and human psychology but hey, we are intelligent beings trying to build artificial intelligence! We have to be really careful on what we do, how we introspect and whether or not we are fooling ourselves.

If you found an error, highlight it and press Shift + Enter or click here to inform us.

15 thoughts on “Adversarial red flag”

Comments are closed.